InetSoft Webinar: Deploying a Data Science Solution

Below is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of Machine Learning and Healthcare. The presenter is Abhishek Gupta, Product Manager at InetSoft, and the guest is Jim Reynolds, CTO at Health Analytica.

Abhishek: Sure, and as we're looking to the future, not only at the payoffs and the implications of where this is relevant, there are also some architectural changes in the way people are using hybrid cloud technologies and sourcing their data centers in different ways. Tell us a little bit about how you're deploying your data science solution. Are you running this all on premise?

Do your security requirements make that necessary now? Are you into a hybrid mode, or how will that pan out in the future, and how might that impact how you can get more data into a compute mode that you can analyze?

Jim: So, over time there's no doubt that we'll see hybrid deployment models where some of it will be on premise and some of it will be in the cloud. This is going to happen in healthcare and healthcare claims, but as people are seeing in the news, there is still a lot of apprehension around doing healthcare in the cloud, and so a lot of our requirements oblige us to keep data under lock and key.

Over time, as things get more secure, there's no doubt that this hybrid cloud and on-premise deployment is going to happen. Building an architecture that allows you to segment your data, so that you can discriminate the things that go out into the cloud from the things that you have to keep private, is something that should be top of mind in any effort of this size or scale.
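To make the segmentation idea concrete, here is a minimal sketch in Python. It is not Health Analytica's implementation: the field names, the `PRIVATE_FIELDS` set, and the `segment_record` helper are all hypothetical, and real de-identification of healthcare data involves far more than dropping a few columns.

```python
# Hypothetical field names; a real deployment would derive these from policy.
KEY_FIELD = "claim_id"
PRIVATE_FIELDS = {"patient_name", "ssn", "date_of_birth"}


def segment_record(record: dict) -> tuple[dict, dict]:
    """Split one record into (cloud_part, private_part).

    Both parts keep KEY_FIELD so they can be rejoined on premise later.
    """
    cloud_part = {k: v for k, v in record.items() if k not in PRIVATE_FIELDS}
    private_part = {
        k: v for k, v in record.items() if k in PRIVATE_FIELDS or k == KEY_FIELD
    }
    return cloud_part, private_part


claim = {
    "claim_id": "C-1",
    "patient_name": "Jane Doe",
    "ssn": "000-00-0000",
    "procedure_code": "99213",
    "amount": 120.0,
}
cloud_part, private_part = segment_record(claim)
```

The design choice in this sketch is to decide per field, at ingestion time, which side of the boundary a value lives on, so that anything shipped to the cloud never contains the private columns in the first place.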

Abhishek: Okay, well, we're coming up on half an hour of discussion, and we do have some questions from our audience, and we can still take some more. So if there are any more questions out there, please enter them. Jim, we've got a few more moments, and I've got some questions here. One of the questions is about the types of data, how you're bringing in more kinds of data, and how you expect that to be done. I guess the question is about variety of data and how you're planning to manage that.

|

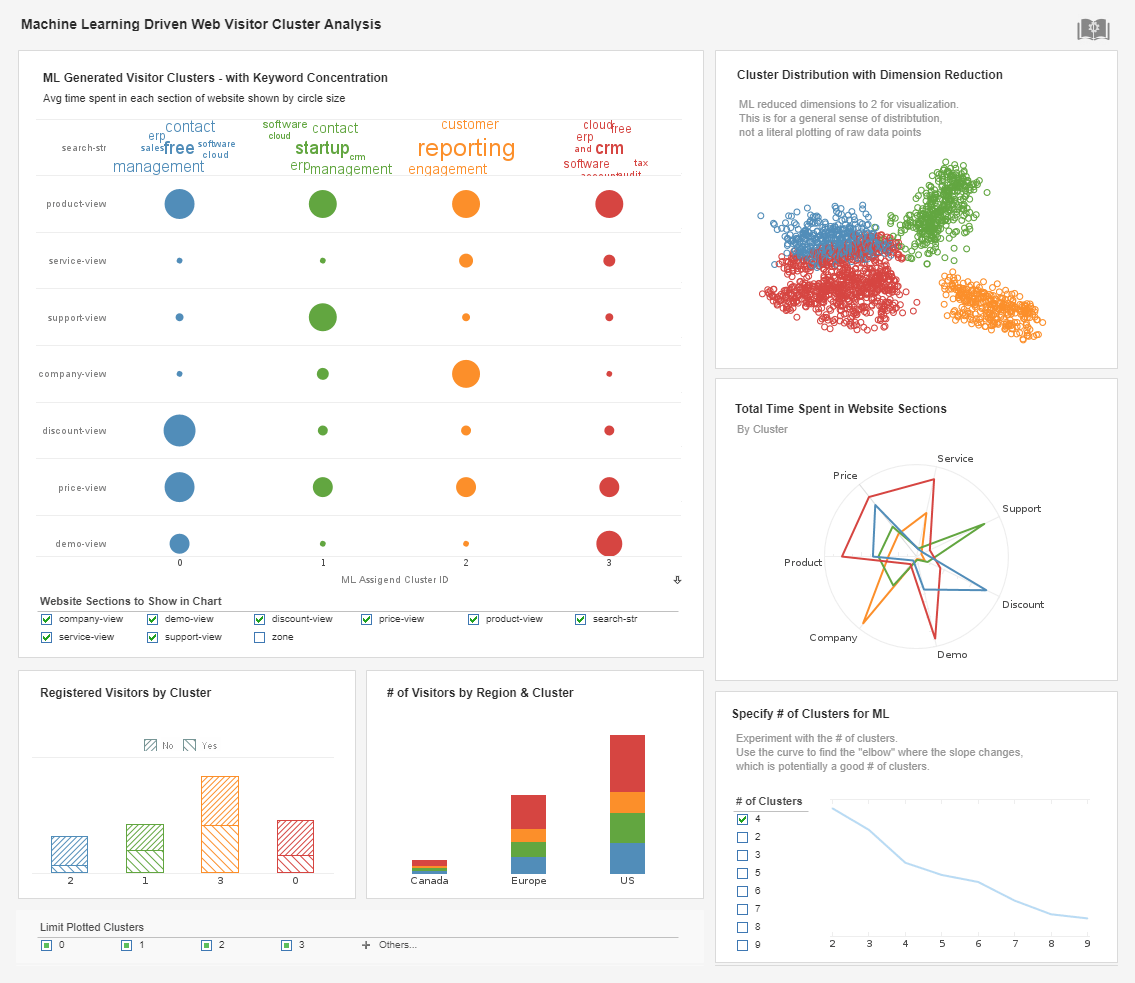

View live interactive examples in InetSoft's dashboard and visualization gallery. |

Jim: Right, so that goes back to the curation part of our pipeline, and the technologies that are required there. Again, a lot of the column stores are really well suited for that problem, and so that allows us to keep track of the different types and varieties of data. But it's also important that you have technology that integrates well with multiple types of technologies in a processing pipeline.

So one of the benefits that we found with Vertica is that they continue to give API access to the database, and for example, they recently came out with an integration with Kafka, a native integration with Kafka. That is a big help for us to be able to incorporate streaming data.

In addition to that, the flux store that they've got allows us to do partial analysis where some of the data stays in our structured formats, but it allows us to still do columnar scans of the highly critical data. So having the ability to build out a more complicated architecture is something that a data store should do, and Vertica does a pretty nice job with that.

Abhishek: Okay, we have another question here. Again, I think it's the data type and management issue, but it's asking about streaming real-time data. Is that important for you? Is that something you'll be doing, or do you have more of a batch mentality when it comes to bringing the right value to your clients?

Jim: So far streaming has not been super high on our list, but over time that will be changing without a doubt, as more granular bits of data come together to feed real-time status updates about particular patients, for example, where you're managing a small number of highly chronically ill people. As streaming data comes in, you're going to want to be able to combine it with the large-scale analytics that people traditionally called batch analytics, and it's the fusion of those two cadences of data that is going to provide a lot of value to our kinds of problem sets and our kinds of customers. So it's going to be both, and that's actually an architectural challenge.
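One way to picture the fusion of the two cadences is a batch step that computes per-patient baselines and a streaming step that compares each incoming reading against them. The sketch below is purely illustrative: the function names, the tuple layout, and the 1.2 threshold are assumptions, not anything described in the webinar.

```python
from statistics import mean


def build_baselines(history):
    """Batch step: average historical reading per patient."""
    grouped = {}
    for patient_id, value in history:
        grouped.setdefault(patient_id, []).append(value)
    return {pid: mean(values) for pid, values in grouped.items()}


def score_stream(events, baselines, threshold=1.2):
    """Streaming step: yield readings that exceed threshold * baseline.

    Patients with no batch baseline yet are skipped rather than flagged.
    """
    for patient_id, value in events:
        baseline = baselines.get(patient_id)
        if baseline is not None and value > threshold * baseline:
            yield patient_id, value, baseline


# Hypothetical data: (patient_id, reading) pairs.
history = [("p1", 100.0), ("p1", 110.0), ("p2", 80.0)]
baselines = build_baselines(history)

incoming = [("p1", 108.0), ("p2", 120.0), ("p3", 90.0)]
alerts = list(score_stream(incoming, baselines))
```

In practice the baselines would be refreshed by a scheduled batch job and the events would arrive from a message queue; here both are plain Python lists so the example runs on its own.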


Abhishek: Sure. Another question here is about outputs. How do you get more people to use this? Are you exploring new tools or interfaces? I think, Jim, this gets back to that democratization issue of how you close the daylight, or remove the daylight, between those who want to know and the data and analytics itself.

Jim: Right, so over time the front end of our data is going to become oriented around people who have a job to do, and an investigator's job is very different from that of, say, an actuarial person who's looking for a population study. So there will be a big variety of user interfaces and user experiences that people will want.

So having a platform that is able to supply both rich APIs and rich user experiences is a big part of that, and sometimes people are just going to want traditional off-the-shelf tools. There are plenty of Excel users, and so preparing data that can just be moved into Excel is one example of a very straightforward way of democratizing data. But there will be other people who want more visual approaches to the data, and as visualization continues to mature and get more sophisticated, having good APIs that allow you to morph that user experience is really where the focus needs to be.
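As a small example of preparing data that can simply be moved into Excel, the sketch below writes a list of result rows to CSV text using Python's standard `csv` module. The row contents and the `rows_to_csv` helper are hypothetical; only the selected columns are exported, so internal fields never reach the spreadsheet.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize dict rows to CSV text, keeping only the listed columns."""
    buffer = io.StringIO(newline="")
    # extrasaction="ignore" silently drops keys not named in fieldnames.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical summary rows produced by an upstream analysis.
rows = [
    {"region": "North", "claims": 120, "avg_cost": 310.5, "internal_note": "x"},
    {"region": "South", "claims": 95, "avg_cost": 287.0, "internal_note": "y"},
]
csv_text = rows_to_csv(rows, ["region", "claims", "avg_cost"])
```

When the text is saved to a file, writing it with the `utf-8-sig` encoding generally helps Excel detect the character set correctly.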
