Information Integration Platform

Below is the continuation of the transcript of a webinar hosted by InetSoft on the topic of Agile Data Access and Agile Business Intelligence. The presenter is Mark Flaherty, CMO at InetSoft.

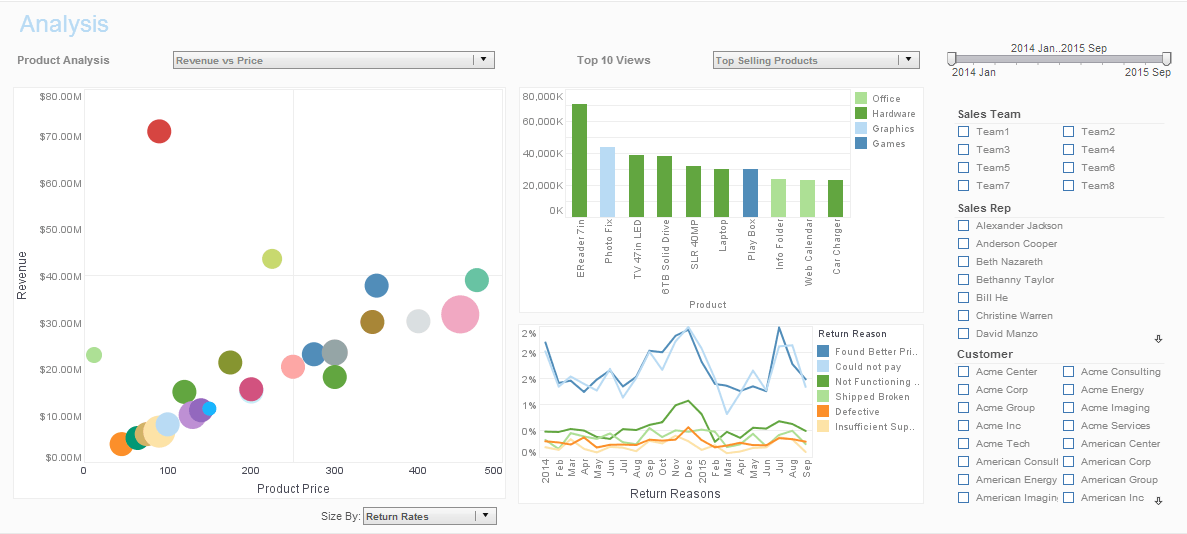

Mark Flaherty (MF): Rich, dynamic data services adapt quickly to change as you add and modify core systems, and as new applications and requirements for correlation emerge from the business side. So in this case, the data services platform is, if you will, feeding dashboards virtually.

So rather than replicating all of the data from all of these places into yet another data mart or data warehouse, they use a virtual view to build several dashboards around specific business areas.
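To make the idea of a virtual view concrete, here is a minimal Python sketch, assuming two hypothetical sources: an order system that exports CSV and a CRM that returns JSON. The view function re-reads and joins both sources every time it is called, so no replicated copy is kept. The source contents, field names, and `sales_by_region_view` are illustrative only, not InetSoft's API.

```python
import csv
import io
import json
from typing import Dict, Iterator

# Hypothetical payloads standing in for two systems of record.
ORDERS_CSV = """order_id,customer_id,amount
1001,c1,250.00
1002,c2,75.50
1003,c1,120.25
"""
CUSTOMERS_JSON = '[{"id": "c1", "region": "West"}, {"id": "c2", "region": "East"}]'


def read_orders() -> Iterator[dict]:
    """Parse the order source on demand; nothing is stored between calls."""
    for row in csv.DictReader(io.StringIO(ORDERS_CSV)):
        yield {"customer_id": row["customer_id"], "amount": float(row["amount"])}


def read_customers() -> Dict[str, dict]:
    """Parse the CRM source and index customers by id."""
    return {c["id"]: c for c in json.loads(CUSTOMERS_JSON)}


def sales_by_region_view() -> Dict[str, float]:
    """A virtual view: the join and aggregation run at query time."""
    customers = read_customers()
    totals: Dict[str, float] = {}
    for order in read_orders():
        region = customers[order["customer_id"]]["region"]
        totals[region] = totals.get(region, 0.0) + order["amount"]
    return totals


print(sales_by_region_view())  # {'West': 370.25, 'East': 75.5}
```

Because nothing is materialized, a change in either source shows up the next time a dashboard queries the view. The trade-off is that every query pays the cost of reaching the sources.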


Then, in addition, some external data was consolidated selectively for offline reporting, which they access through our BI tool. So while you have seen three different use cases, the core idea that runs through all of them is that you are trying to access and leverage disparate data sources easily, flexibly, and at low cost. It could be internal or external data, and it could come in a variety of formats: structured, unstructured, semi-structured, et cetera.

Let me explain a little about how InetSoft addresses that problem. We start from a philosophical perspective: we have always believed that an information integration platform has to be holistic. That doesn’t mean it includes every function or has to replace what you have already got.

It’s holistic in the sense that it can leverage all of the investments you have already made while still virtualizing data services across them. So we obviously have to think about connectivity to a broad range of sources, from structured all the way to highly unstructured.

That’s important not just from a data access perspective but also from a metamodel perspective, which I will come to in a minute. The second core idea behind InetSoft's innovative thinking is that data mashup will eventually have to be the predominant way of integrating information, because the volume of data is growing so fast and the disparity of information is so high that physically replicating and consolidating all the information you might want to use is becoming impractical. In the future it's going to happen less and less.

So the moment you realize this, you start to think about flexible ways of combining virtual, real-time data with cached data and with scheduled batch movement of data at a very granular, node level, rather than thinking of each of these as an either/or technology.
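One way to picture choosing live, cached, or batch delivery per node, rather than as an either/or, is a small Python sketch in which each node carries its own mode. The `DataNode` class, the mode names, and the `ttl_seconds` parameter are hypothetical illustrations, not part of any InetSoft product; the clock is injectable so the cache behavior can be shown without waiting.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class DataNode:
    """A node in a hypothetical data-service graph with its own delivery mode."""

    name: str
    fetch: Callable[[], Any]            # reaches the underlying source
    mode: str = "live"                  # "live", "cached", or "batch"
    ttl_seconds: float = 60.0           # freshness limit for "cached" mode
    clock: Callable[[], float] = time.monotonic
    _value: Any = field(default=None, init=False, repr=False)
    _loaded_at: Optional[float] = field(default=None, init=False, repr=False)

    def get(self) -> Any:
        if self.mode == "live":
            # Virtual, real-time: query the source on every request.
            return self.fetch()
        if self.mode == "cached":
            # Reuse the last result until it is older than ttl_seconds.
            now = self.clock()
            if self._loaded_at is None or now - self._loaded_at >= self.ttl_seconds:
                self._value, self._loaded_at = self.fetch(), now
            return self._value
        if self.mode == "batch":
            # Serve the snapshot written by the last scheduled load.
            if self._loaded_at is None:
                raise RuntimeError(f"{self.name}: no batch snapshot loaded yet")
            return self._value
        raise ValueError(f"unknown mode {self.mode!r}")

    def run_batch_load(self) -> None:
        """Refresh a batch-mode snapshot; a scheduler (not shown) would call this."""
        self._value, self._loaded_at = self.fetch(), self.clock()


# Usage: a counter stands in for a source so each real fetch is visible.
calls = {"n": 0}


def source() -> int:
    calls["n"] += 1
    return calls["n"]


fake_now = [0.0]
node = DataNode("inventory", source, mode="cached", ttl_seconds=30,
                clock=lambda: fake_now[0])
print(node.get(), node.get())  # 1 1  (second call is served from the cache)
fake_now[0] = 31.0
print(node.get())              # 2    (TTL expired, so the source is queried again)
```

Because the mode lives on each node, one node in a dashboard can stay live while a slower, rarely changing neighbor is cached or loaded in batch.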

And the third idea is that once you have abstracted and delivered these data services, you want to make them very reusable and very fast to implement and maintain. There is a separation, if you will, between a logical data service and the management of that service from the perspective of access control, security, service levels, governance, and so on. That means the same logical data service might be accessed with high service levels by the CIO and the CFO, while certain other users might have only restricted access, or access only during certain times. And behind all of this, obviously, there is a lot of backend work on security, performance, scalability, and governance.
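The separation between a logical data service and the policies that govern who may call it, and at what service level, can be sketched in Python as a thin policy layer in front of an unchanged service function. The roles, the `AccessPolicy` fields, the tier labels, and the time window are assumptions chosen to mirror the CIO/CFO example; a real platform would also enforce the tier (for example with separate resource pools), which this sketch only labels.

```python
from dataclasses import dataclass
from datetime import time as dtime
from typing import Callable, Dict, List, Optional, Tuple


def revenue_by_quarter() -> List[dict]:
    """The logical data service: it knows nothing about who is calling."""
    return [{"quarter": "Q1", "revenue": 1.2e6}, {"quarter": "Q2", "revenue": 1.5e6}]


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical per-role policy, managed separately from the service."""

    service_tier: str = "standard"                   # label only in this sketch
    window: Optional[Tuple[dtime, dtime]] = None     # allowed hours, or None for any time


# Roles without an entry are denied (default deny).
POLICIES: Dict[str, AccessPolicy] = {
    "CIO": AccessPolicy(service_tier="priority"),
    "CFO": AccessPolicy(service_tier="priority"),
    "analyst": AccessPolicy(window=(dtime(8, 0), dtime(18, 0))),
}


def call_service(service: Callable[[], List[dict]], role: str, at: dtime) -> dict:
    """Check the caller's policy, then delegate to the unchanged logical service."""
    policy = POLICIES.get(role)
    if policy is None:
        raise PermissionError(f"role {role!r} may not call {service.__name__}")
    if policy.window is not None:
        start, end = policy.window
        if not start <= at < end:
            raise PermissionError(f"role {role!r} is limited to {start}-{end}")
    return {"tier": policy.service_tier, "rows": service()}


print(call_service(revenue_by_quarter, "CFO", dtime(22, 0))["tier"])            # priority
print(len(call_service(revenue_by_quarter, "analyst", dtime(9, 30))["rows"]))   # 2
```

Changing who gets priority, or when analysts may query, means editing `POLICIES`; the service function itself stays untouched and reusable.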

Elements of Data Infrastructure Security

Data infrastructure security takes a multifaceted approach to safeguarding the integrity, confidentiality, and availability of an organization's data assets. Firstly, authentication and access control are fundamental, ensuring that only authorized users can reach sensitive data and resources. This involves verifying user identities with mechanisms such as multi-factor authentication (MFA) and identity federation, and enforcing granular access controls based on users' roles, privileges, and permissions through models such as role-based access control (RBAC). By applying the principle of least privilege and regularly reviewing access rights, organizations can reduce the risk of unauthorized access and data exposure.

Secondly, encryption plays a critical role in protecting data both at rest and in transit within the data infrastructure. By encrypting data with strong, well-vetted cryptographic algorithms and properly managed keys, organizations can keep sensitive information unreadable to unauthorized parties even if storage is breached or network traffic is intercepted.
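For encryption in transit, Python's standard `ssl` module shows what a client connecting to a data source over TLS should enforce. This is a minimal sketch assuming a client-side connection; `make_client_tls_context` is a hypothetical helper name. At-rest encryption is not shown because Python's standard library has no general-purpose symmetric cipher, so it usually comes from the database, the storage service, or a third-party library.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for talking to a data source over the network."""
    # create_default_context() loads the system CA certificates and turns on
    # certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

The context would then wrap a connected socket with `ctx.wrap_socket(sock, server_hostname=host)`, so the server's certificate is checked against the host name the client expects.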

Encryption covers data stored in databases, file systems, and cloud storage services, as well as data transmitted over networks through secure channels such as Transport Layer Security (TLS) or virtual private networks (VPNs). Additionally, organizations may apply data masking to obfuscate sensitive data elements within datasets, further reducing the risk of exposure during processing or analysis.

Thirdly, comprehensive monitoring, logging, and auditing capabilities are essential elements of data infrastructure security, enabling organizations to detect and respond to security incidents in a timely manner.

This involves capturing events and activities across the data infrastructure with robust logging, and deploying intrusion detection systems (IDS) and security information and event management (SIEM) solutions that analyze those logs for suspicious behavior or anomalies indicating a potential breach. By setting up real-time alerts, conducting regular security audits, and performing incident response drills, organizations can identify threats proactively, mitigate risks, and maintain the integrity and resilience of their data infrastructure as the threat landscape evolves.
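As an illustration of the kind of correlation rule an IDS or SIEM applies to collected logs, here is a minimal Python sketch that flags an account when it accumulates too many failed logins within a sliding time window, a common sign of password guessing. The `FailedLoginMonitor` class and its thresholds are hypothetical; SIEM products typically define such rules in their own rule or query languages.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


class FailedLoginMonitor:
    """Alert when one account fails to log in too often within a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)
        self.alerts: List[Tuple[float, str]] = []

    def record(self, timestamp: float, user: str, success: bool) -> None:
        """Feed one authentication log event into the rule."""
        if success:
            return
        events = self._failures[user]
        events.append(timestamp)
        # Drop failures that have slid out of the time window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        if len(events) >= self.max_failures:
            self.alerts.append((timestamp, user))
            events.clear()  # avoid re-alerting on the same burst


monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
for t in (0, 10, 20):
    monitor.record(t, "alice", success=False)
monitor.record(100, "bob", success=False)
print(monitor.alerts)  # [(20, 'alice')]
```

Clearing the window after an alert is one design choice among several; a real rule might instead suppress duplicate alerts for a cooldown period.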
