What Goes Into a Software Testing and QA Report

As more companies move to digital solutions, software testing and quality assurance (QA) have become some of the most important elements of a successful product launch. Quality control processes lay the foundation for building reliable applications.

Quality assurance has many parts: developing test plans, executing tests, debugging issues, and performing root cause analysis. Each one helps build confidence in the product before it is deployed to your customers. But how do you track that progress throughout each step of development?

The answer lies in creating and utilizing an effective QA report.

Testing Methodology

The testing phase of a project verifies that a product is high quality and meets its requirements. This phase can include manual testing, which is done by a human, and automated testing, where software tools execute the tests. Other forms include performance testing, where the system is assessed under various load levels to determine its capacity limits.

Each method must align with the project's goals and be adjusted when needed. For example, automated testing can quickly assess basic functionality if the project is time-sensitive. On the other hand, if the product requires human judgment to evaluate properly, manual testing is more effective.

5 Different Software Testing Methods

1. Unit Testing

Unit testing, the most granular level of software testing, focuses on individual parts or units of code. Developers create test cases to verify the accuracy of small pieces of code independently. These tests check that each component operates as expected and help find issues early in the development cycle. Unit testing is also central to Test-Driven Development (TDD), in which tests are written before the production code.
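As a minimal sketch of what such a test can look like, the example below uses Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical and stand in for any small unit of code; they are not taken from a real project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test checks one behavior of the unit in isolation."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)
```

Saved in a file, these tests can be run with `python -m unittest`. In a TDD workflow, the three test methods would be written first and `apply_discount` filled in until they pass.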

2. Integration Testing

Integration testing examines how various software application modules or components interact. It verifies that the integrated components function properly together and that information moves between them as intended. Different approaches can be used for integration testing, such as top-down, bottom-up, or a combination of both. Testing of this kind helps discover interface flaws, data transfer problems, and unanticipated component interactions.

3. Functional Testing

Functional testing evaluates the software's capabilities to make sure they comply with the requirements. Test cases are created to address a variety of use cases, inputs, and scenarios. User interface, API, and database testing are just a few examples of the various layers that functional testing may cover. This approach makes sure end users can complete their intended tasks without running into functional issues.

4. Performance Testing

Performance testing assesses the software's scalability, stability, and responsiveness in various scenarios. This includes:

- stress testing to push the software to its limits

- load testing to evaluate performance under anticipated user loads

- scalability testing to determine the product's capacity to manage rising demand.

Performance evaluations identify bottlenecks, resource constraints, and prospective problems that can impact the product's overall effectiveness.

5. Security Testing

Security testing evaluates the software's resistance to security threats and vulnerabilities. It includes techniques such as vulnerability scanning, penetration testing, and security code reviews. Security testing finds software flaws that might allow unauthorized access, data breaches, or other security incidents.

Test Environment and Data

The environment and data used for testing software are frequently overlooked. To make sure they are compatible with the application being tested, the hardware, software, operating systems, and browsers must be chosen carefully.

How to select them properly?

Consider the application's requirements, target audience, and usage statistics. Ensure diversity in devices, screen sizes, and browsers to mimic real-world scenarios. Test on popular browsers like Chrome, Firefox, Safari, and Edge, covering various operating system versions. Weigh the results from compatibility testing tools against user feedback, and update your testing matrix accordingly.

Equally important is the quality and relevance of the sample data used during testing. The data must include real-world examples and be varied enough to cover the expected use scenarios. Testing in a controlled, secure environment helps increase the software's dependability by showing that it operates correctly across those scenarios.
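A testing matrix of browsers and operating systems can be generated rather than maintained by hand. The sketch below is only illustrative: the operating system versions and the list of excluded combinations are assumptions, and should be replaced with values drawn from your own usage statistics.

```python
from itertools import product

# Hypothetical coverage targets; replace with values from your usage statistics.
browsers = ["Chrome", "Firefox", "Safari", "Edge"]
operating_systems = ["Windows 11", "macOS 14", "Ubuntu 22.04"]

# Combinations assumed not to be worth testing (Safari is an Apple-platform browser).
unsupported = {("Safari", "Windows 11"), ("Safari", "Ubuntu 22.04")}

# Every browser/OS pair except the excluded ones.
test_matrix = [
    combo for combo in product(browsers, operating_systems)
    if combo not in unsupported
]

for browser, os_name in test_matrix:
    print(f"{browser} on {os_name}")
```

With these inputs the matrix contains 10 combinations. Adding a device or screen-size list as a third argument to `product` extends the matrix in the same way.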

Steps to Creating an Optimal Software Testing Environment

1. Requirement Analysis

This step involves understanding the testing goals and requirements, including the types of tests to be conducted, the software components involved, and the data needed.

2. Environment Configuration

Create a test environment configuration plan based on the gathered requirements. This plan includes setting up servers, databases, networking, and software components that replicate the production environment. Make sure the chosen environment closely resembles the production setup so that test results are accurate.

3. Software Installation and Configuration

Install the required software elements, including databases, application servers, web servers, and operating systems. Configure these elements' security settings, application settings, and connection parameters in accordance with the prerequisites listed.

4. Data Preparation and Migration

Prepare the test data required for different testing scenarios. This data should cover various cases, including normal usage, edge cases, and boundary conditions. Migrate or replicate production data if needed, anonymizing sensitive information to protect privacy.
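One common way to anonymize replicated data is to replace sensitive fields with salted hash tokens. The sketch below assumes records are plain dictionaries; the field names, the salt, and the 12-character token length are all hypothetical choices. Strictly speaking this is pseudonymization: the same input always maps to the same token, which keeps joins between tables working but may not be sufficient for low-entropy values or strict privacy requirements.

```python
import hashlib


def pseudonymize(value: str, salt: str) -> str:
    """Replace a sensitive value with a stable token derived from a salted hash."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]  # shortened for readability; an arbitrary choice


def anonymize_record(record: dict, sensitive_fields: set, salt: str) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: pseudonymize(str(value), salt) if key in sensitive_fields else value
        for key, value in record.items()
    }


# Hypothetical production row and field names.
row = {"id": 42, "email": "jane@example.com", "plan": "pro"}
safe_row = anonymize_record(row, sensitive_fields={"email"}, salt="test-env-salt")
print(safe_row)
```

Non-sensitive fields such as `id` and `plan` pass through unchanged, so the test data keeps its shape while the email address no longer appears in the test environment.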

5. Initial Testing and Validation

Execute initial tests in the configured test environment to ensure its functionality and readiness for testing. Test the software's basic operations, interactions, and integrations to identify setup issues or anomalies.

Bug Tracking and Issue Management

This step helps ensure that the software is high quality and performs as expected. Severity and priority levels are assigned to issues to determine their impact on the software. Severity measures the extent to which a bug affects the software's functionality, while priority indicates the order in which issues should be addressed. By applying these levels, developers can address the most important issues first so that the software meets user requirements.
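The difference between severity and priority is easier to see in a small model. The sketch below is an assumption about how a team might encode the two scales; the level names and the rule "priority first, severity as a tie-breaker" are illustrative, not a standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """How badly the bug affects functionality (lower value = worse)."""
    CRITICAL = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4


class Priority(IntEnum):
    """How soon the bug should be fixed (lower value = sooner)."""
    URGENT = 1
    NORMAL = 2
    DEFERRED = 3


@dataclass
class Bug:
    title: str
    severity: Severity
    priority: Priority


def triage_order(bugs):
    """Sort by priority first, then by severity as a tie-breaker."""
    return sorted(bugs, key=lambda bug: (bug.priority, bug.severity))


# Hypothetical backlog.
bugs = [
    Bug("Slow search on large datasets", Severity.MEDIUM, Priority.NORMAL),
    Bug("Typo on pricing page", Severity.LOW, Priority.URGENT),
    Bug("Misaligned footer in legacy browser", Severity.LOW, Priority.DEFERRED),
    Bug("Crash when exporting an empty report", Severity.CRITICAL, Priority.NORMAL),
]

for bug in triage_order(bugs):
    print(f"{bug.priority.name:<8} {bug.severity.name:<8} {bug.title}")
```

Note that the low-severity typo is handled first because it was marked urgent, for example ahead of a launch, while the critical crash leads the group of normal-priority bugs.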

Here are some effective ways to manage bugs and issues in your projects:

Bug Tracking Tools: Use bug tracking software like Jira, Bugzilla, or Trello to log, track, and manage bugs and issues throughout the development cycle. These tools provide issue categorization, assignment, status tracking, and reporting features.

Clear Issue Descriptions: When reporting an issue, provide a clear and detailed description. Include steps to reproduce the problem, expected behavior, and observed behavior. This will help developers understand the issue quickly.

Prioritization and Severity: Classify bugs based on their severity and impact. Categorize them as critical, high, medium, or low priority. This allows the development team to focus on critical issues first and maintain an organized approach to fixing problems.

Regular Status Updates: Keep stakeholders informed about the status of bugs and issues. Regularly update the issue tracker with progress, status changes, and resolutions. This transparency improves communication and accountability.

Version Control Integration: Integrate your bug-tracking system with version control tools like Git. This connection allows developers to associate code changes with specific issues, making tracking fixes and maintaining a historical record easier.

Testing and Validation: Verify the bug is resolved before closing an issue. This process involves manual or automated tests to confirm that the issue no longer exists and that the solution didn't introduce new problems.

Documentation and Knowledge Base: Maintain well-organized documentation and a knowledge base of resolved issues. This repository can be a reference for future troubleshooting and help identify patterns.

Feedback Loop: Gather feedback from users and testers after a bug is resolved. This feedback can validate the fix and provide insights into the user experience.
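The version control integration mentioned above often relies on developers putting the issue key in the commit message. The sketch below shows one way to build an index from issue keys to commits. It assumes keys of the form `ABC-123` and takes commits as simple `(hash, message)` pairs; both the key format and the sample data are hypothetical, and real trackers usually provide their own integration for this.

```python
import re
from collections import defaultdict

# Assumed key format: uppercase project code, hyphen, number (e.g. QA-101).
# This pattern can produce false positives on strings such as "UTF-8".
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def issues_by_commit(commits):
    """Map each issue key to the list of commit hashes that mention it."""
    index = defaultdict(list)
    for sha, message in commits:
        for key in ISSUE_KEY.findall(message):
            index[key].append(sha)
    return dict(index)


# Hypothetical commit log.
commits = [
    ("a1b2c3d", "QA-101 Fix null check in login handler"),
    ("d4e5f6a", "Refactor session cache"),
    ("0718293", "QA-101 QA-117 Add regression tests"),
]

print(issues_by_commit(commits))
```

An index like this gives each issue a historical record of the code changes made for it, and commits that mention no issue at all are easy to spot.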

Test Results and Findings

After testing, the results and findings are in, and they are often revealing. The quantitative data provides a clear picture of what went right and wrong during the testing process, while the qualitative information delves deeper into what users found particularly confusing or intuitive.

However, what truly stands out are the critical issues, defects, and vulnerabilities discovered during testing. These range from small glitches to major systemic flaws that require immediate attention.

How to write test results and findings?

When documenting test results and findings, it's important to convey the outcomes of your testing in a clear and organized manner.

- Start with a concise title indicating the specific test scenario or component being evaluated and the testing date for context.

- Introduce the scope and objectives of the testing briefly.

- Detail the test environment, outlining the hardware, software, and configurations used.

- Enumerate the executed test cases, specifying whether each passed, failed, or was blocked due to external factors.

- For failed or blocked cases, provide comprehensive findings describing the observed behavior and error messages and, if applicable, attach relevant screenshots or logs.

- Assign severity and priority levels to issues to aid in prioritization.

- Outline the steps to reproduce each issue and contrast the expected behavior with the actual behavior encountered.

- Conclude by summarizing the overall results, indicating passed and failed percentages, and outlining potential next steps, like retesting or further analysis.

- Express gratitude for the collaboration of team members and stakeholders, ensuring an open and effective communication channel for issue resolution and improvement.
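The passed and failed percentages in the summary can be computed directly from the list of executed test cases. The sketch below assumes each test case has exactly one of three statuses; the test case IDs and outcomes are hypothetical.

```python
from collections import Counter

STATUSES = ("passed", "failed", "blocked")


def summarize(results):
    """Return the count and percentage of test cases in each status."""
    counts = Counter(results.values())
    total = len(results)
    summary = {}
    for status in STATUSES:
        count = counts.get(status, 0)
        # Guard against an empty run to avoid dividing by zero.
        percent = round(100 * count / total, 1) if total else 0.0
        summary[status] = {"count": count, "percent": percent}
    return summary


# Hypothetical test case IDs and outcomes.
results = {
    "TC-001": "passed",
    "TC-002": "passed",
    "TC-003": "failed",
    "TC-004": "passed",
    "TC-005": "blocked",
    "TC-006": "passed",
    "TC-007": "failed",
    "TC-008": "passed",
}

for status, stats in summarize(results).items():
    print(f"{status:<8} {stats['count']:>2}  {stats['percent']:>5}%")
```

For these eight cases the summary is 62.5% passed, 25.0% failed, and 12.5% blocked. Reporting blocked cases separately keeps them from being mistaken for failures.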

Performance Metrics

Response times, the outcomes of load tests, and system stability are all examples of performance measures.

Response Times

Response time measures how quickly a software application responds to user actions or requests. This metric is particularly relevant for applications where user experience is important, such as web applications, mobile apps, and online services. Short response times contribute to a better user experience, while prolonged response times can lead to user frustration.

During performance testing, response times are measured across various scenarios, including normal usage, peak load, and stress conditions. These tests help identify bottlenecks, latency issues, and potential points of failure that can impact response times.
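Response times are usually reported as percentiles rather than as an average alone, because a few slow requests can hide behind a reasonable-looking mean. The sketch below uses the nearest-rank method, which is one of several percentile definitions; the sample values in milliseconds are hypothetical.

```python
import math
import statistics


def percentile(samples, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds from one test scenario.
response_ms = [120, 95, 110, 480, 105, 130, 98, 102, 115, 2100]

print("mean:", statistics.mean(response_ms))
print("p50: ", percentile(response_ms, 50))
print("p90: ", percentile(response_ms, 90))
print("p95: ", percentile(response_ms, 95))
```

Here the median is 110 ms while the mean is 345.5 ms, pulled up by a single 2100 ms outlier. Reporting both, together with p90 or p95, makes that kind of latency issue visible.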

Performance engineers analyze response time data to optimize system components, database queries, network interactions, and other factors influencing the software's responsiveness. This contributes to user satisfaction, engagement, and the overall success of the software product.

Outcomes of Load Tests

Load testing evaluates a software application's performance under anticipated user loads and concurrent usage. The outcomes of load tests provide valuable insights into the system's capacity, scalability, and performance characteristics. Load testing involves gradually increasing the user load to assess how the application handles traffic spikes and heavy usage periods.

The load test outcomes include:

- Response times.

- Throughput (transactions processed per unit of time).

- Error rates.

- Resource utilization (CPU, memory, network).

Load tests help identify the system's breaking point, where performance degrades or errors occur. By analyzing load test outcomes, development teams can determine the system's limitations, optimize resource allocation, scale infrastructure, and implement necessary performance improvements.
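The load test outcomes listed above can be derived from a few raw counters per load step. The sketch below assumes each step records the number of concurrent users, its duration, and request and error counts. The 1% error-rate threshold used to define the breaking point is an arbitrary assumption, and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LoadStep:
    concurrent_users: int
    duration_s: float
    requests: int
    errors: int


def throughput(step):
    """Requests processed per second during the step."""
    return step.requests / step.duration_s


def error_rate(step):
    """Fraction of requests that failed during the step."""
    return step.errors / step.requests if step.requests else 0.0


def breaking_point(steps, max_error_rate=0.01):
    """Lowest user load whose error rate exceeds the threshold, or None."""
    for step in sorted(steps, key=lambda s: s.concurrent_users):
        if error_rate(step) > max_error_rate:
            return step.concurrent_users
    return None


# Hypothetical results from gradually increasing the load.
steps = [
    LoadStep(concurrent_users=50, duration_s=60, requests=3000, errors=0),
    LoadStep(concurrent_users=100, duration_s=60, requests=5700, errors=12),
    LoadStep(concurrent_users=200, duration_s=60, requests=7200, errors=360),
]

for step in steps:
    print(step.concurrent_users, throughput(step), round(error_rate(step), 4))
print("breaking point:", breaking_point(steps))
```

In this data, throughput keeps rising from 50 to 120 requests per second, but the error rate jumps to 5% at 200 users, so that step is flagged as the breaking point under the assumed threshold.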

System Stability

System stability is a performance measure that assesses the software's ability to maintain consistent functionality and responsiveness over extended usage periods. A stable system operates smoothly without crashes, hangs, or unexpected behavior. System stability is especially crucial for continuously running applications, such as servers, online services, and mission-critical systems.

During performance and stress testing, the system's stability is monitored by observing error rates, memory leaks, resource exhaustion, and other signs of instability. The goal is to ensure the application remains responsive and available even under heavy loads or adverse conditions.
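One simple signal for a possible memory leak is a steady upward trend in memory use over a long run. The sketch below fits a least-squares line to memory samples and reports its slope. The samples and the alert threshold are hypothetical, and a positive slope is only a hint that warrants investigation, not proof of a leak.

```python
def slope_per_minute(samples):
    """Least-squares slope of memory (MB) against time (minutes)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    times = [t for t, _ in samples]
    values = [v for _, v in samples]
    mean_t = sum(times) / len(times)
    mean_v = sum(values) / len(values)
    # Slope = covariance(time, memory) / variance(time).
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("samples must span more than one point in time")
    return sxy / sxx


# Hypothetical (minute, memory in MB) samples from a soak test.
memory_samples = [(0, 512), (1, 515), (2, 519), (3, 524), (4, 527), (5, 531)]

growth = slope_per_minute(memory_samples)
ALERT_MB_PER_MIN = 1.0  # assumed threshold; tune for your application

print(f"memory growth: {growth:.2f} MB/min")
if growth > ALERT_MB_PER_MIN:
    print("memory is trending upward; investigate for a possible leak")
```

The same approach can be applied to other resources observed during stability testing, such as open file handles or database connections.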

Detecting and addressing stability issues before deployment is necessary to prevent disruptions, data loss, and negative impacts on users. By achieving system stability, organizations build trust in their software's reliability, which contributes to user satisfaction and long-term success.

QA Reports

A quality assurance (QA) report provides detailed insight into areas for improvement and any potential risks that need to be addressed. Companies use such reports to maintain their competitiveness in the industry.

QA reports are also useful in showing compliance with regulations and standards. The insights provided by QA reports can help businesses save time, money, and resources by catching issues early in the process, leading to better products and happier customers.

How to Write QA Reports

Customized Communication

Write QA reports with your audience in mind, whether developers, managers, or executives. Tailor technical details and language to match their understanding and priorities.

Structured Approach

- Organize your report coherently.

- Begin with an introduction that outlines objectives and scope.

- Cover testing methodologies, results, findings, and recommendations.

- Conclude with insights and future steps.

Clear and Concise

Communicate findings clearly and concisely. Use bullet points, headers, and visual aids like graphs to make information easily digestible.

In-Depth Findings

Detail findings meticulously. Include issue descriptions, steps to reproduce, and expected vs. actual behavior. Attach relevant visuals to enhance clarity.

Actionable Recommendations

Offer actionable recommendations for resolving issues. Suggest potential solutions, workarounds, or improvements to address identified problems.

Impact Analysis

Analyze the impact of issues on functionality and user experience. Describe how each issue might affect end-users or project goals.

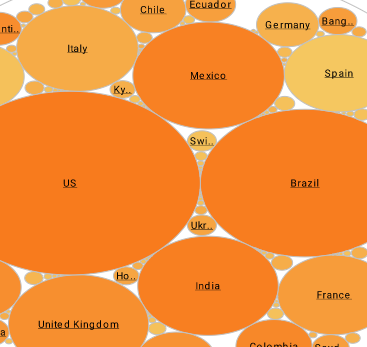

Visual Aids

Incorporate visuals like charts or graphs to present data effectively. Visual aids can enhance understanding and highlight key points.

Proofreading and Review

Thoroughly proofread and edit the report. Correct grammar and spelling errors, and ensure technical terms are explained for non-technical readers.