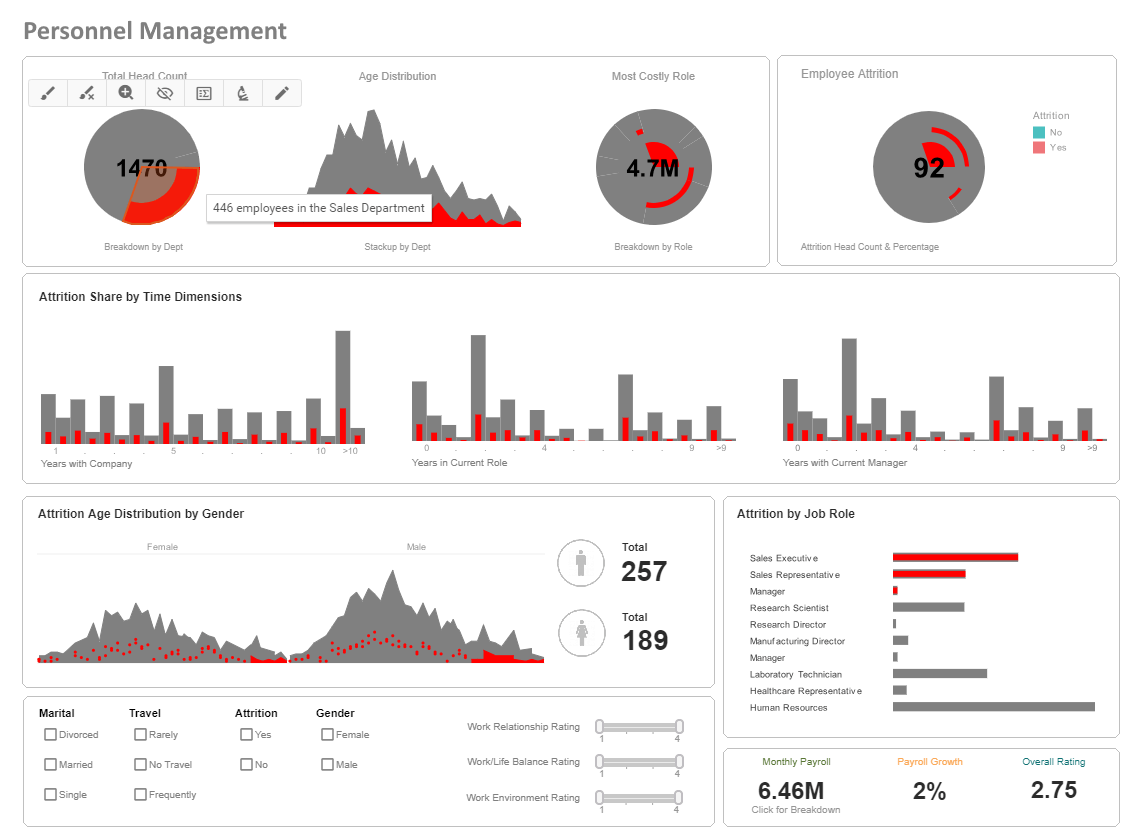

InetSoft Webinar: Adding New Data Sources to Enrich Information Dashboards

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "Agile BI: How Data Virtualization and Data Mashup Help." The speaker is Mark Flaherty, CMO at InetSoft.

Part of the value here is the ability to quickly add new data sources to enrich this informational dashboard. That happens as a batch process using the scheduler, and the changes to those data models are accomplished much more rapidly. But from an actual service perspective, it also integrates back through the virtualization layer with real-time data from social media: Wikipedia, Facebook, Twitter, and Foursquare, including check-ins, check-outs, likes, dislikes, and Twitter activity.

So as people are navigating in real time, they can actually pull up what Wikipedia says about that church. Oh, it’s Gothic architecture. That’s interesting; I am going to stop and take a look, if I am in tourist mode. Or if I am at a business lunch, I am looking for offers that are contextual. Or if I am in a social mood, I can look at which of my friends and which restaurants are in this neighborhood.

So the possibilities are endless, and the ability to bring those disparate types of information together is pretty critical. The goals were to easily access disparate data, gain agility, provide real-time data services, incorporate new feeds, and deliver high performance and scalability for large data volumes. It meant creating a hybrid approach: using a data warehouse for the stable location data and combining the more semi-static feeds with it through real-time integration using virtualization. The benefits we have already talked about are pretty immense in this case.
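
To make the hybrid approach concrete, here is a minimal Python sketch, not the actual implementation discussed in the webinar. It assumes an in-memory SQLite database standing in for the data warehouse of stable location data, and a hypothetical `fetch_live_feed` function standing in for a real-time social media source. The table name, place names, and feed values are all invented for illustration.

```python
import sqlite3

# Stand-in for the data warehouse: stable location data loaded by a batch job.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE places (place_id INTEGER PRIMARY KEY, name TEXT, category TEXT)"
)
warehouse.executemany(
    "INSERT INTO places VALUES (?, ?, ?)",
    [(1, "Old Town Church", "landmark"), (2, "Corner Bistro", "restaurant")],
)
warehouse.commit()


def fetch_live_feed(place_id):
    """Hypothetical real-time source (check-ins, likes); hard-coded here."""
    live = {
        1: {"check_ins": 12, "likes": 40},
        2: {"check_ins": 5, "likes": 17},
    }
    return live.get(place_id, {"check_ins": 0, "likes": 0})


def place_view(place_id):
    """Virtual view: join the warehouse row with the live feed at query time."""
    row = warehouse.execute(
        "SELECT place_id, name, category FROM places WHERE place_id = ?",
        (place_id,),
    ).fetchone()
    if row is None:
        return None
    view = {"place_id": row[0], "name": row[1], "category": row[2]}
    view.update(fetch_live_feed(place_id))  # live attributes are never stored
    return view


print(place_view(1))
```

The point of the sketch is the split: the warehouse only changes when the batch job runs, while the live attributes are fetched at the moment the view is requested.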

Golden Record of the Customer

And for another example, I am going to switch away after this from transactional applications to master data management. Lots of organizations are spending millions of dollars doing this, and that’s because it is so important to have this clean golden record of the customer. The idea here is that you would only keep maybe 10 attributes of the entity that you are trying to master in a physical hub; the other 100 might come through virtualization across the broad range of data sources, effectively using real-time matching, replication, and de-duplication rules to create a federated view of that entity. You can choose to keep very little in the hub, just the keys; a little more, like core information such as name, address, and phone number; or a little more still, enriched. But the rest of the transactional information, as well as entity information, would come in through virtualization. We have some white papers on this, so I am not going to dwell on it, but that’s another classic business informational use of data virtualization.
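
As a rough illustration of the federated golden record described above, the following Python sketch keeps only a key and a few core attributes in a hub and pulls the remaining attributes from other sources at request time. Everything in it is an assumption made for the example: the `hub` and `sources` structures, the use of a normalized email address as the matching rule, and the exact-duplicate check as the de-duplication rule.

```python
# Physical hub: only the master key and a few core attributes are stored.
hub = {
    "C001": {"name": "Ana Lopez", "email": "ana.lopez@example.com"},
}

# Hypothetical source systems; their rows are read at query time, not copied.
sources = {
    "crm": [
        {"crm_id": "A-17", "email": "Ana.Lopez@Example.com ", "segment": "gold"},
    ],
    "billing": [
        {"acct": "B-9", "email": "ana.lopez@example.com", "balance": 120.0},
        {"acct": "B-9", "email": "ana.lopez@example.com", "balance": 120.0},
        {"acct": "B-4", "email": "someone.else@example.com", "balance": 15.0},
    ],
}


def normalize(email):
    """Matching rule (assumed): compare emails trimmed and lowercased."""
    return email.strip().lower()


def golden_record(customer_id):
    """Build a federated view: hub core plus matched, de-duplicated source rows."""
    core = hub[customer_id]
    key = normalize(core["email"])
    record = {"customer_id": customer_id, **core}
    for source_name, rows in sources.items():
        matched = []
        for row in rows:
            if normalize(row["email"]) != key:
                continue  # row belongs to a different customer
            attrs = {k: v for k, v in row.items() if k != "email"}
            if attrs not in matched:  # de-duplication rule: drop exact repeats
                matched.append(attrs)
        record[source_name] = matched
    return record


print(golden_record("C001"))
```

A real deployment would use far richer matching rules than a single normalized field, but the shape is the same: a small physical hub, with everything else resolved through the virtual layer.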

And we will skip past some of the big data examples. They are very important, but again the concepts are similar: leveraging big data within the enterprise, which may mean log files, or unstructured content, or cloud-hosted data, which could be MySQL systems or MapReduce-type systems, which Hadoop is an implementation of. The question is how do you actually bring them together and then republish either to the Cloud or to your enterprise users?

Again, we have some papers on this that go deeper. But the idea is to push the business intelligence to the edges and use caching and reuse strategies, while providing a capability to access multiple diverse data sources.

Coming to transactional applications, we find that there are two patterns that are predominant. These are not the only patterns, but these are two very predominant patterns. The first pattern is one of providing contextual data to a transaction or process system. In this case, I have picked a customer example that’s providing a call center application. The call center agent might go through multiple screens. This could just as well be an insurance company agent providing underwriting on a policy or adjudicating a claim, or it could be a loan officer approving a loan in a bank, in financial services, what have you. Or you could be doing a pricing approval for a large contract in a manufacturing kind of system.
