Best in Breed Modeling Tool

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "Data Science and Data Scientists." The speaker is Abhishek Gupta, Product Manager at InetSoft.

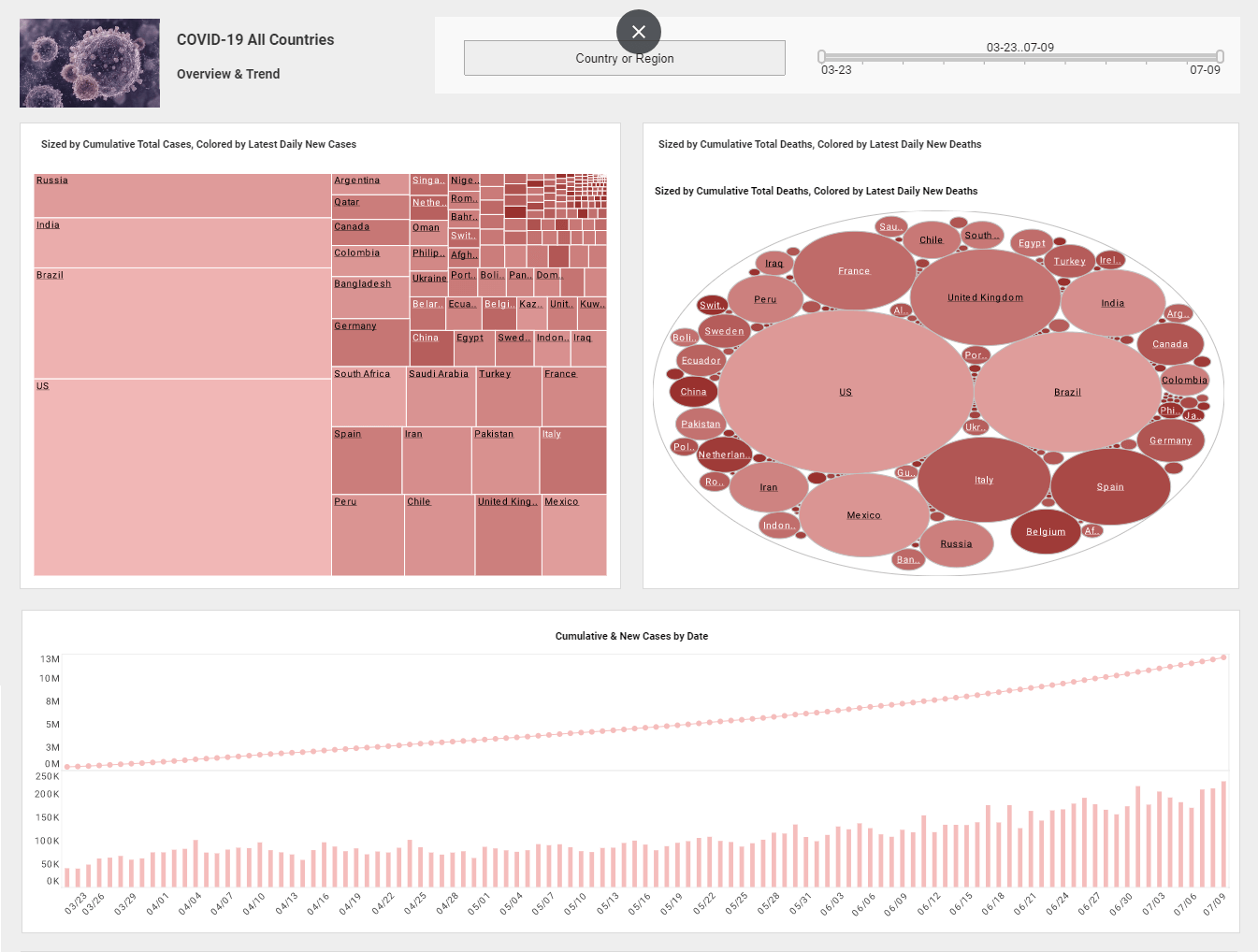

That’s a big part of what they do. They have to understand the right algorithms, but just as important, they need strong visualization tools so they can see the patterns, the charts, the trend lines, the heat maps, and everything else that a good data mining and statistical modeling toolkit will provide. This is another component included in InetSoft's solution. So at the very highest level, those are the core tools that a data scientist needs to do their job and be productive.

What else can I think of in a core curriculum that a budding data scientist should have in their toolkit? Well, it's not so much a tool as a frame of reference. They have to understand their business context. They are not building statistical models for their own fun and amusement. It's their work. They need to understand the business context, the business application, what the business is paying them for.

If the business is paying them to build a churn model that looks at the causes and variables behind customers leaving or staying, then they need to understand the products and services the company provides. The data scientist needs to learn a fair amount about customer churn as a business issue. That area of understanding is what we call the subject domain.

They need to understand the high-level business metrics. They are trying to improve an outcome. They are trying to achieve a goal, which might be to improve loyalty, improve retention, or improve the rate at which customers accept offers that are made to them. Or the goal might be to reduce the rate at which customers abandon shopping carts in the customer portal.
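The metrics named here reduce to simple ratios. The following is a minimal sketch in Python, assuming hypothetical counts for a single reporting period. None of these figures or variable names come from the webinar.

```python
def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


# Hypothetical counts for one reporting period (illustrative only).
customers_at_start = 1000
customers_retained = 920
offers_made = 400
offers_accepted = 60
carts_created = 500
carts_purchased = 150

# Share of starting customers who are still customers at period end.
retention_rate = rate(customers_retained, customers_at_start)
# Churn is the complement of retention.
churn_rate = 1 - retention_rate
# Share of offers that customers accepted.
offer_acceptance_rate = rate(offers_accepted, offers_made)
# Share of carts that never turned into a purchase.
cart_abandonment_rate = 1 - rate(carts_purchased, carts_created)

print(f"retention:        {retention_rate:.1%}")
print(f"churn:            {churn_rate:.1%}")
print(f"offer acceptance: {offer_acceptance_rate:.1%}")
print(f"cart abandonment: {cart_abandonment_rate:.1%}")
```

Tracking these ratios before and after a model goes into use is one way to tie the modeling work back to the outcome the business is paying for.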

Whatever outcome the business wants to achieve, from a practical standpoint, the data scientist needs to understand it. The data scientist needs to be laser focused on that, and that’s the be-all and end-all. It's not important whether neural networks are inherently better than regression, or whether logistic regression models are better, or what the underlying algorithm is. There are many ways to skin the cat of building a model that fits the data.

In the final analysis, the data scientist should not develop a fetish for any given statistical modeling approach. They should be familiar with the strengths, weaknesses, and applicability of different modeling approaches and modeling tools, depending on the business problem.
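One way to act on this is to score candidate models by the business outcome rather than by the algorithm behind them. The sketch below is illustrative only: the holdout data, the two rule-based "models", and the dollar values are all hypothetical assumptions, and real candidates would be trained models rather than one-line rules.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical holdout record: (monthly_spend, support_tickets, churned)
Customer = Tuple[float, int, bool]

holdout: List[Customer] = [
    (20.0, 4, True),
    (25.0, 0, True),
    (80.0, 3, False),
    (90.0, 0, False),
    (28.0, 1, True),
    (60.0, 5, True),
    (70.0, 1, False),
    (22.0, 0, False),
]


def ticket_rule(c: Customer) -> bool:
    """Hypothetical candidate A: flag customers with 3 or more support tickets."""
    return c[1] >= 3


def low_spend_rule(c: Customer) -> bool:
    """Hypothetical candidate B: flag customers spending under 30 per month."""
    return c[0] < 30


def net_value(model: Callable[[Customer], bool], data: List[Customer],
              saved_per_churner: float = 100.0,
              cost_per_contact: float = 10.0) -> float:
    """Assumed value of a retention campaign that contacts every flagged customer."""
    flagged = [c for c in data if model(c)]
    caught = sum(1 for c in flagged if c[2])  # flagged customers who did churn
    return caught * saved_per_churner - len(flagged) * cost_per_contact


candidates: Dict[str, Callable[[Customer], bool]] = {
    "ticket_rule": ticket_rule,
    "low_spend_rule": low_spend_rule,
}
scores = {name: net_value(model, holdout) for name, model in candidates.items()}
best = max(scores, key=scores.get)
print(scores, "->", best)
```

The selection step never looks at how a candidate works internally, only at what it would be worth under the stated assumptions.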

The last point to make is that you definitely need classroom instruction on a topic like this. There’s a significant learning curve. Class structure is important for becoming a high-quality, certified data scientist. But hands-on experience is key as well. It’s like flying a plane. There are only so many simulations you can do before you have to get in the cockpit and fly a real plane around, and hopefully not crash it.

You need hands-on laboratory work to be a truly well-rounded data scientist, and ideally this is also how you get on-the-job experience. That will deepen your chops in building good statistical models and tuning them to the data. So keep that in mind: hands-on experience is critically important.

What Is New in Data Modeling Tools in 2024

Data modeling tools have evolved significantly in 2024, driven by advancements in technology and the increasing complexity of data environments. Here are some of the notable new features and trends in data modeling tools this year:

1. AI and Machine Learning Integration

- Automated Model Generation: Tools now leverage AI and machine learning to automate the creation of data models. These tools can analyze existing data structures and generate optimized models, reducing the time and expertise required.

- Predictive Analytics: Data modeling tools are incorporating predictive analytics capabilities, allowing users to build models that can predict future trends and behaviors based on historical data.

2. Enhanced Collaboration Features

- Real-Time Collaboration: Modern data modeling tools support real-time collaboration, enabling multiple users to work on the same model simultaneously, similar to how Google Docs allows for collaborative document editing.

- Version Control: Advanced version control systems are integrated, allowing teams to track changes, manage versions, and revert to previous states if necessary.

3. Cloud-Native and Hybrid Solutions

- Cloud Integration: Many tools are now cloud-native, offering seamless integration with cloud platforms like AWS, Azure, and Google Cloud. This integration facilitates easier access to data, scalability, and collaborative efforts across geographically dispersed teams.

- Hybrid Capabilities: Tools support hybrid environments, allowing organizations to manage data both on-premises and in the cloud, providing flexibility and control over data storage and processing.

4. Support for NoSQL and Big Data

- Diverse Data Sources: Data modeling tools now support a wide range of data sources, including NoSQL databases (like MongoDB, Cassandra) and big data platforms (such as Hadoop and Spark). This allows for comprehensive modeling across different types of data stores.

- Schema-On-Read: Tools are increasingly supporting schema-on-read paradigms, which are crucial for dealing with semi-structured and unstructured data typical in big data environments.
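To illustrate the schema-on-read idea, here is a minimal Python sketch. Raw records are stored as-is, and a schema is applied only when the data is read. The sample events, the `SCHEMA` mapping, and the `read_with_schema` helper are hypothetical and not taken from any particular tool.

```python
import json
from typing import Any, Callable, Dict, Iterator

# Raw, semi-structured records stored without an enforced schema (hypothetical data).
RAW_EVENTS = """\
{"user": "a1", "amount": "19.99", "tags": ["new"]}
{"user": "b2", "amount": 5}
{"user": "c3", "note": "no amount recorded"}
"""

# The schema lives with the reader, not with the stored data.
SCHEMA: Dict[str, Callable[[Any], Any]] = {"user": str, "amount": float}


def read_with_schema(raw: str,
                     schema: Dict[str, Callable[[Any], Any]]) -> Iterator[Dict[str, Any]]:
    """Parse JSON lines and project each record onto the schema at read time."""
    for line in raw.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        row: Dict[str, Any] = {}
        for field, cast in schema.items():
            value = record.get(field)
            # Missing fields become None; extra fields are simply ignored.
            row[field] = cast(value) if value is not None else None
        yield row


rows = list(read_with_schema(RAW_EVENTS, SCHEMA))
for row in rows:
    print(row)
```

A different reader could apply a different schema to the same stored records, which is what distinguishes this from schema-on-write.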

5. Advanced Data Governance and Security

- Data Lineage: Enhanced data lineage features allow users to track the origin and transformation of data throughout its lifecycle, ensuring transparency and compliance.

- Security Integration: Improved security features, including encryption, access controls, and compliance checks, are now standard, ensuring that data models adhere to regulatory requirements.
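The data lineage idea in this section can be pictured as a graph that maps each dataset to the datasets it was derived from. The Python sketch below is a simplified illustration. The dataset names and the `LINEAGE` structure are hypothetical, and real tools typically also record the transformations applied along each edge.

```python
from typing import Dict, List, Set

# Hypothetical lineage graph: dataset -> datasets it was directly derived from.
LINEAGE: Dict[str, List[str]] = {
    "churn_features": ["customers_clean", "orders_clean"],
    "customers_clean": ["crm_export"],
    "orders_clean": ["orders_raw"],
    "crm_export": [],
    "orders_raw": [],
}


def upstream_sources(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return every dataset that the given dataset depends on, directly or indirectly."""
    seen: Set[str] = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited; also guards against cycles
        seen.add(current)
        stack.extend(lineage.get(current, []))
    return seen


def origins(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return the upstream datasets that have no parents of their own."""
    return {d for d in upstream_sources(dataset, lineage) if not lineage.get(d)}


print(sorted(upstream_sources("churn_features", LINEAGE)))
print(sorted(origins("churn_features", LINEAGE)))
```

Walking the graph in this direction answers the compliance question of where a given field ultimately came from.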

6. Visualization and Reporting

- Interactive Dashboards: Tools offer sophisticated visualization capabilities, allowing users to create interactive dashboards that provide insights into data structures and relationships.

- Customizable Reports: Users can generate customizable reports to share insights with stakeholders, enhancing communication and decision-making processes.

7. Low-Code and No-Code Capabilities

- User-Friendly Interfaces: Low-code and no-code features enable users without extensive technical backgrounds to create and manage data models through intuitive drag-and-drop interfaces.

- Template Libraries: Extensive libraries of pre-built templates and components help users quickly build models suited to various industries and use cases.

8. Metadata Management

- Unified Metadata Repositories: Tools now offer unified repositories for managing metadata, ensuring consistency and accuracy across different models and data sources.

- Automated Metadata Discovery: Automated discovery and cataloging of metadata help streamline the modeling process and maintain an up-to-date data inventory.
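As a rough illustration of automated metadata discovery, the Python sketch below introspects a database and builds a small catalog of tables and columns. It uses an in-memory SQLite database with two hypothetical tables. The `discover_metadata` helper is an assumption for illustration, not the mechanism of any specific modeling tool.

```python
import sqlite3
from typing import Any, Dict, List

# Build a throwaway in-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, signup_date TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    """
)


def discover_metadata(connection: sqlite3.Connection) -> Dict[str, List[Dict[str, Any]]]:
    """Build a catalog of tables and their columns from SQLite's own metadata."""
    catalog: Dict[str, List[Dict[str, Any]]] = {}
    tables = connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        columns = connection.execute(f"PRAGMA table_info('{table}')").fetchall()
        catalog[table] = [
            {
                "column": col[1],
                "type": col[2],
                "not_null": bool(col[3]),   # True only if declared NOT NULL
                "primary_key": bool(col[5]),
            }
            for col in columns
        ]
    return catalog


catalog = discover_metadata(conn)
for table, columns in catalog.items():
    print(table, [c["column"] for c in columns])
```

Rerunning a scan like this on a schedule is one simple way to keep a data inventory current as tables change.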

9. Integration with Data Warehousing and ETL Tools

- Seamless ETL Integration: Data modeling tools provide tight integration with ETL (Extract, Transform, Load) tools, facilitating smooth data migration and transformation processes.

- Data Warehousing Solutions: Integration with modern data warehousing solutions, such as Snowflake and Redshift, ensures that data models are optimized for performance and scalability.

10. Focus on Sustainability and Efficiency

- Energy-Efficient Processing: With a growing emphasis on sustainability, data modeling tools are optimizing algorithms and processing methods to reduce energy consumption and improve efficiency.

- Resource Management: Tools are incorporating features to monitor and manage the computational resources used in data modeling, ensuring efficient utilization and cost control.