InetSoft Webinar: Dropping Data Storage Costs Helped Spark the Big Data Trend

This is the continuation of the transcript of a Webinar hosted by InetSoft on the topic of "The Newest Buzz Word in BI: Big Data." The speaker is Abhishek Gupta, product manager at InetSoft.

Here’s a topic that’s really interesting to me: how dropping data storage costs helped spark the Big Data trend. The idea is that we’re saving all this data now. Storage is getting really cheap, and one of the reasons relational databases got so popular back in the 70s, 80s, and 90s is that they try to store as much data as possible in the least amount of space.

You have the relational concept of saving each piece of data in only one spot if you can. But now we’ve opened up to storing all the data at once without trying to make it efficient. We just store everything.
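To make the "one spot" idea concrete, here is a minimal sketch using made-up customer and order records (all names and values are hypothetical). The normalized layout stores the customer's details once and has each order point to them by key. The "store everything" layout copies those details into every order. Counting field values is only a crude stand-in for storage size, but it shows where the extra space goes.

```python
# Hypothetical customer and order data, made up for illustration only.

# Normalized (relational) layout: the customer's details live in one spot,
# and each order refers to them by key.
customers = {1: {"name": "Acme Corp", "city": "Boston", "phone": "555-0100"}}
orders = [
    {"order_id": 100, "customer_id": 1, "amount": 250.0},
    {"order_id": 101, "customer_id": 1, "amount": 75.5},
    {"order_id": 102, "customer_id": 1, "amount": 12.0},
]


def denormalize(orders, customers):
    """Copy each order's customer details into the order record itself."""
    flat = []
    for order in orders:
        # Drop the key and replace it with a full copy of the customer fields.
        row = {k: v for k, v in order.items() if k != "customer_id"}
        row.update(customers[order["customer_id"]])
        flat.append(row)
    return flat


def count_values(records):
    """Count the individual field values stored across a list of records."""
    return sum(len(record) for record in records)


flat_orders = denormalize(orders, customers)
normalized_total = count_values(orders) + count_values(customers.values())
denormalized_total = count_values(flat_orders)
print(normalized_total, denormalized_total)  # 12 15
```

The gap grows with every additional order for the same customer, which is exactly the cost that cheap storage makes easier to accept in exchange for not having to join tables back together.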

There is also the issue of NoSQL associated with Big Data. The term NoSQL is another one that sometimes defies definition, especially because the name is really misleading. NoSQL databases, by and large, are first of all non-relational, and second of all, very often set up in such a way that you do not have to have a consistent schema from row to row.

Typically you will have some columns or fields in common across rows, but different rows may have extra data, and that’s a very strange concept if you’ve worked with relational databases as long as I have.
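A minimal sketch of what "some fields in common, extra data on some rows" looks like, assuming records are represented as plain Python dictionaries. The rows and the helper names `common_fields` and `extra_fields` are hypothetical, not part of any particular NoSQL product.

```python
# Hypothetical "rows" from a schemaless store: they share some fields,
# but each may carry extra fields the others lack.
rows = [
    {"id": 1, "user": "alice", "event": "login"},
    {"id": 2, "user": "bob", "event": "purchase", "amount": 19.99, "currency": "USD"},
    {"id": 3, "user": "carol", "event": "login", "device": "mobile"},
]


def common_fields(records):
    """Return the set of field names present in every record."""
    keys = [set(record) for record in records]
    return set.intersection(*keys) if keys else set()


def extra_fields(records):
    """Map each record's id to the fields it has beyond the common set.

    Assumes every record carries an "id" field.
    """
    shared = common_fields(records)
    return {record["id"]: sorted(set(record) - shared) for record in records}


print(sorted(common_fields(rows)))  # ['event', 'id', 'user']
print(extra_fields(rows))  # {1: [], 2: ['amount', 'currency'], 3: ['device']}
```

In a relational table, `amount`, `currency`, and `device` would each need a column on every row, mostly filled with NULLs; here each row simply carries what it has.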


You get used to a certain reality, and you know things go down with gravity, not up. At least you thought so. But in the NoSQL world we have the ability to really vary the structure, and that turns out to help in the Big Data world because of the variety of data sources and the fact that they’re typically not relational either.

A big thing in Big Data, and a big thing in NoSQL, by the way, and this is very interesting to me, is that it’s not so much that we don’t have a schema; it’s that we decide what the schema is when we do our analysis. The schema gets decided at query time, because with data in log files and sources like that, there are different ways to interpret the data and to decide where one field ends and another one begins. So if we can store things without enforcing a schema, that gives us more flexibility at the time of analysis.
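Here is a minimal sketch of deciding the schema at query time, often called "schema on read." The log lines and their format are made up, and the two parser functions are hypothetical. The raw lines are stored untouched, and each query applies whichever interpretation suits it: one treats the date and time as separate fields and extracts latency, the other treats them as a single timestamp and pulls a product id out of the path.

```python
import re

# Raw log lines kept exactly as they arrived; nothing checks their structure on write.
# The log format is a made-up example.
raw_log = [
    "2013-05-01 10:15:02 GET /products/42 200 ms=35",
    "2013-05-01 10:15:07 POST /cart 201 ms=120",
    "2013-05-01 10:16:11 GET /products/7 404 ms=12",
]

PRODUCT_PATH = re.compile(r"/products/(\d+)")


def read_as_requests(line):
    """Schema A: six space-separated fields, with latency parsed out of 'ms=N'."""
    date, time, method, path, status, latency = line.split()
    return {"date": date, "time": time, "method": method, "path": path,
            "status": int(status), "ms": int(latency.split("=", 1)[1])}


def read_as_product_views(line):
    """Schema B: one combined timestamp plus a product id from the path, if any."""
    fields = line.split()
    match = PRODUCT_PATH.search(line)
    return {"timestamp": f"{fields[0]} {fields[1]}",
            "product_id": int(match.group(1)) if match else None}


# Each query picks its schema when it runs, not when the data was stored.
slow_requests = [r for r in map(read_as_requests, raw_log) if r["ms"] > 100]
viewed_products = [r["product_id"] for r in map(read_as_product_views, raw_log)
                   if r["product_id"] is not None]
print([r["path"] for r in slow_requests])  # ['/cart']
print(viewed_products)  # [42, 7]
```

Because nothing was parsed on the way in, a third interpretation can be added later without rewriting the stored data.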

That brings up the whole idea of OLAP cubes, which in Microsoft’s world would be Analysis Services. We all know SQL Server probably isn’t a great Big Data analyzer, but can you take some of the concepts from Big Data and bring them into an analytical platform like InetSoft's?

I’ve been working with Analysis Services since it was called OLAP Services and was part of SQL Server 7. I say that just to date myself, but I always date myself, so it’s kind of an assumed thing.

But you know, Microsoft is working on its own distribution of Hadoop that will run on Windows, and part of what goes on there is an ODBC connection: the new, so-called tabular mode of Analysis Services, which is very much based on the technology in PowerPivot, can actually speak to Hadoop through that ODBC driver.

|

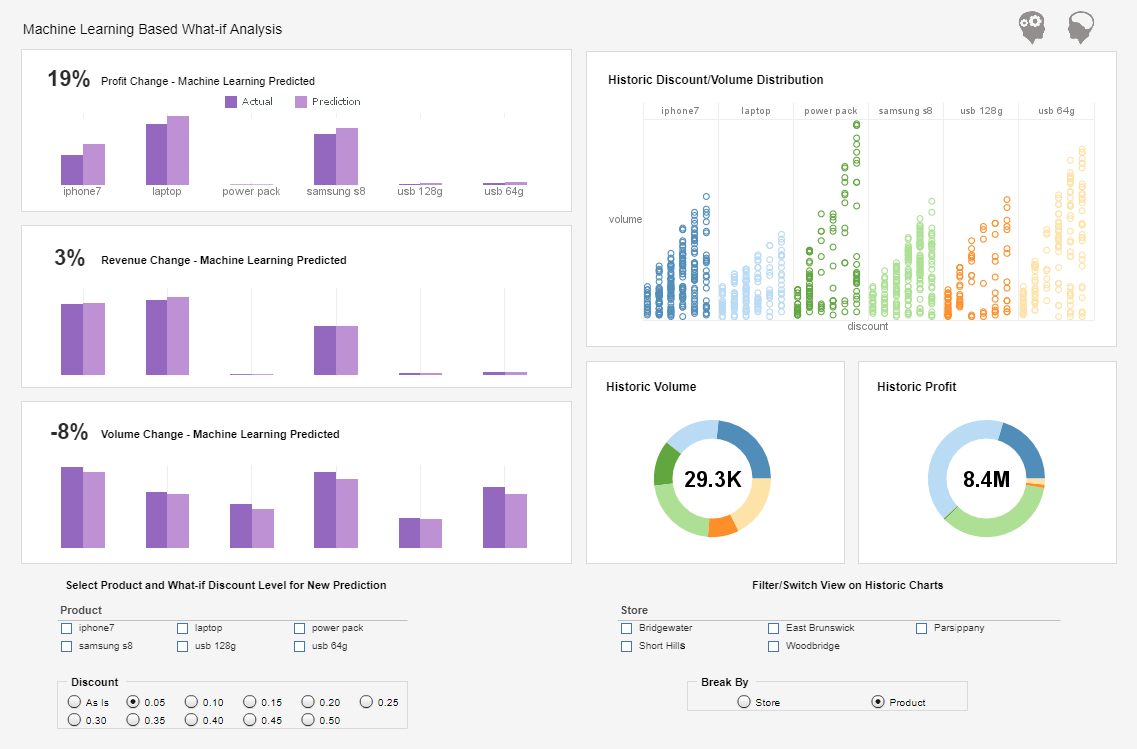

View live interactive examples in InetSoft's dashboard and visualization gallery. |

So there are definitely ways to pull Big Data down into cubes. The other thing people point to is that one edition of SQL Server’s relational database could be a Big Data tool: the SQL Server Parallel Data Warehouse edition. Parallel Data Warehouse, also known as PDW, is one of a few products in the industry that use an architecture called MPP, which stands for Massively Parallel Processing.

In essence, when you buy PDW, what you’re really buying is a cabinet with a whole bunch of SQL Server instances inside it and one node at the front. You give that node a query, and it chops the query up into little bits and sends each bit to one of the many SQL Server instances inside. All those little bits execute in parallel, and then the results get put back together again.
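The chop-up, run-in-parallel, reassemble pattern can be sketched in a few lines. This is a generic scatter-gather illustration under stated assumptions, not how PDW itself is implemented: the sales rows are hypothetical, each inner list stands in for the slice of a table one compute node holds, threads stand in for separate servers, and `control_node_query` is a made-up name for the front node's role.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical (region, amount) sales rows. Each inner list stands in for
# the slice of the table that one compute node stores.
node_partitions = [
    [("east", 100), ("west", 40), ("east", 10)],
    [("west", 60), ("north", 25)],
    [("east", 5), ("north", 75), ("west", 0)],
]


def node_partial_sum(rows):
    """One node's share of: SELECT region, SUM(amount) ... GROUP BY region."""
    totals = {}
    for region, amount in rows:
        totals[region] = totals.get(region, 0) + amount
    return totals


def control_node_query(partitions):
    """Scatter the partial query to every node at once, then gather and merge."""
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        partials = list(pool.map(node_partial_sum, partitions))
    # Put the pieces back together: partial sums simply add up.
    combined = {}
    for partial in partials:
        for region, subtotal in partial.items():
            combined[region] = combined.get(region, 0) + subtotal
    return combined


result = control_node_query(node_partitions)
print(sorted(result.items()))  # [('east', 115), ('north', 100), ('west', 100)]
```

SUM merges directly because partial sums add up; an aggregate like AVG would need each node to return both a sum and a count so the front node can divide at the end. In CPython, threads will not speed up CPU-bound work like this, so treat them purely as a stand-in for independent machines.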
