InetSoft Article: Simple Models of Neurons

By Larry Liang, CTO at InetSoft

In this article, a continuation of the educational series on machine learning, I am going to describe some relatively simple models of neurons. I will describe a number of different models, starting with simple linear and threshold neurons and then moving on to slightly more complicated ones. These are much simpler than real neurons, but they are still complicated enough to let us build neural nets that do some very interesting kinds of machine learning.

In order to understand anything complicated we have to idealize it. That is, we have to make simplifications that allow us to get a handle on how it might work. With atoms for example, we simplify them as behaving like little solar systems. Idealization removes the complicated details that are not essential for understanding the main principles.

It allows us to apply mathematics and to make analogies to other familiar systems. And once we understand the basic principles, it's easy to add complexity and make the model more faithful to reality. Of course, when we idealize something we have to be careful not to remove the thing that is giving it its main properties.


It's often worth understanding models that are known to be wrong, as long as we don't forget they are wrong. For example, a lot of work on neural networks uses neurons that communicate real values rather than discrete spikes of activity. We know cortical neurons don't behave like that, but it is still worth understanding systems like that, and in practice they can be very useful for machine learning.

The first kind of neuron I want to tell you about is the simplest: the linear neuron. It is simple, and it is computationally limited in what it can do. It may give us insights into more complicated neurons, but it may also be somewhat misleading. In the linear neuron, the output Y is the bias of the neuron, B, plus the sum over all its incoming connections of the activity on an input line times the weight on that line. That weight is the synaptic weight on the input line.
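As a minimal sketch of the linear neuron described above, the output can be computed in a few lines of Python. The function and parameter names (`linear_neuron`, `inputs`, `weights`, `bias`) are chosen here for illustration and are not from the original lecture.

```python
def linear_neuron(inputs, weights, bias):
    """Return Y = B + sum_i (x_i * w_i) for one linear neuron.

    inputs  -- activities on the input lines (x_i)
    weights -- synaptic weights on those lines (w_i)
    bias    -- the neuron's bias (B)
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return bias + sum(x * w for x, w in zip(inputs, weights))


# Illustrative values only: two input lines with made-up weights.
y = linear_neuron([1.0, 2.0], [0.5, -0.25], bias=0.1)
print(y)
```

Because the output is just the bias plus a weighted sum, nothing stops it from growing without bound or going negative, which is part of why this model is so limited.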

If we plot the output of a linear neuron as a curve, with the bias plus the weighted activities on the input lines on the X-axis and the output on the Y-axis, we get a straight line that goes through zero.

Very different from linear neurons are binary threshold neurons, which were introduced by McCulloch and Pitts. They actually influenced von Neumann when he was thinking about how to design a universal computer. In a binary threshold neuron you first compute a weighted sum of the inputs, and then you send out a spike of activity if that weighted sum exceeds the threshold.

McCulloch and Pitts thought that the spikes were like the truth values of propositions. So each neuron combines the truth values it gets from other neurons to produce a truth value of its own, and that's like combining some propositions to compute the truth value of another proposition. At the time, in the 1940s, logic was the main paradigm for how the mind might work. Since then, people thinking about how the brain computes have become much more interested in the idea that the brain is combining lots of different sources of unreliable evidence, and so logic isn't such a good paradigm for what the brain is up to.


For a binary threshold neuron, the input-output function is a step: the weighted input has to be above the threshold in order to get an output of one. Otherwise the neuron gives an output of zero. There are actually two equivalent ways to write the equations for binary threshold neurons. We can say that the total input Z is just the activities on the input lines times the weights, and then the output Y is one if Z is above the threshold and zero otherwise.

Alternatively, we could say that the total input includes the bias term. So the total input is what comes in on the input lines times the weights plus this bias term, and then we can say the output is one if that total input is above zero and is zero otherwise. The equivalence is simply that the threshold in the first formulation is equal to the negative of the bias in the second formulation.
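The equivalence of the two formulations can be checked with a short sketch. The function names below are illustrative, and the strict "above" comparison follows the wording of the text; whether an input exactly at the threshold should fire is a convention, and treating it as not firing is an assumption made here.

```python
def weighted_sum(inputs, weights):
    """Sum of activity times weight over all input lines."""
    return sum(x * w for x, w in zip(inputs, weights))


def threshold_neuron(inputs, weights, threshold):
    """First formulation: Z excludes the bias and is compared to a threshold."""
    z = weighted_sum(inputs, weights)
    return 1 if z > threshold else 0


def bias_neuron(inputs, weights, bias):
    """Second formulation: Z includes the bias and is compared to zero."""
    z = bias + weighted_sum(inputs, weights)
    return 1 if z > 0 else 0


# Illustrative values only. Setting bias = -threshold makes the two agree.
weights = [0.6, 0.9, 0.5]
threshold = 1.0
for inputs in ([1, 0, 1], [0, 1, 0]):
    a = threshold_neuron(inputs, weights, threshold)
    b = bias_neuron(inputs, weights, bias=-threshold)
    print(inputs, a, b)
```

The bias form is usually more convenient for learning, since the bias can then be treated like one more weight rather than as a separate kind of parameter.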

A kind of neuron that combines the properties of both linear neurons and binary threshold neurons is the rectified linear neuron. It first computes a linear weighted sum of its inputs, but then it gives an output that is a non-linear function of this weighted sum. So we compute Z in the same way as before. If Z is below zero, we output zero; otherwise we give an output that is equal to Z.

So above zero it is linear, and at zero it makes a hard decision. The input-output curve is flat at zero for negative total input and then rises as a straight line. It's definitely not linear overall, but above zero it is linear. So with a neuron like this we can get a lot of the nice properties of linear systems when it's above zero. We can also get the ability to make decisions at zero.
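A rectified linear neuron is only a small change from the linear one. In this sketch (the names are illustrative, not from the original), the total input includes the bias, and the output is clipped at zero:

```python
def rectified_linear_neuron(inputs, weights, bias):
    """Return max(0, Z), where Z = bias + sum_i (x_i * w_i)."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return max(0.0, z)


# Illustrative values only.
print(rectified_linear_neuron([1.0, 2.0], [1.0, 1.0], bias=-5.0))  # Z = -2.0, prints 0.0
print(rectified_linear_neuron([1.0, 2.0], [1.0, 1.0], bias=0.5))   # Z = 3.5, prints 3.5
```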

The neurons that are probably the most common kind to use in artificial neural nets are sigmoid neurons. They give a real-valued output that is a smooth and bounded function of their total input. It's typical to use the logistic function, where the total input is computed as before: the bias plus the weighted sum of what comes in on the input lines.

|

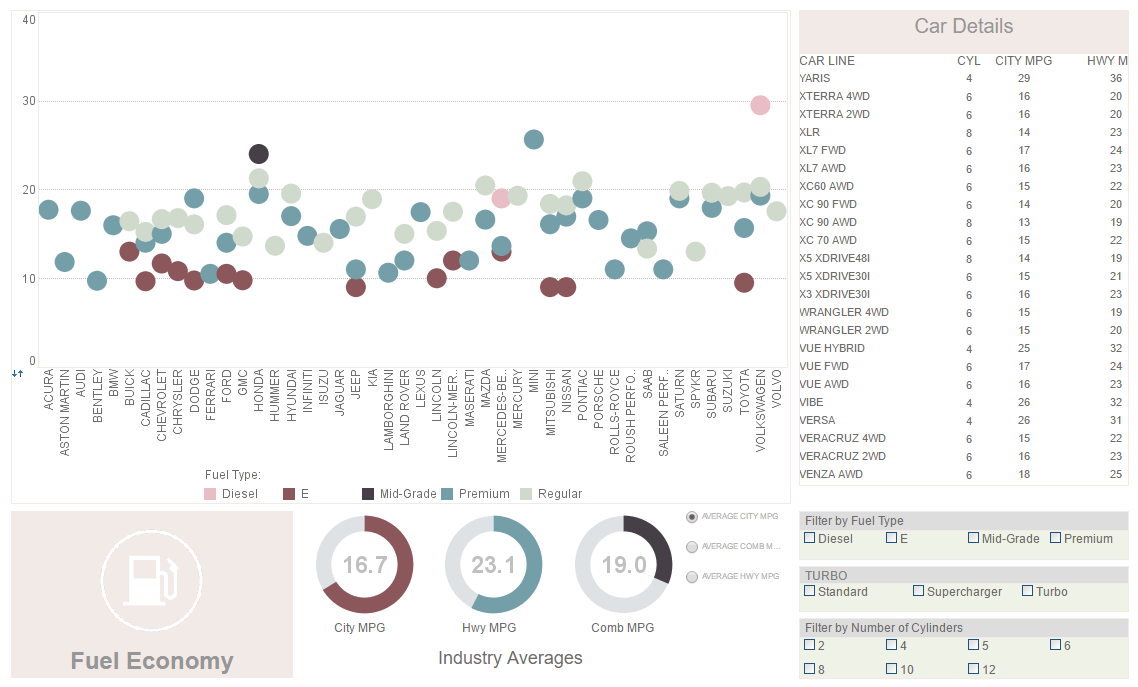

View live interactive examples in InetSoft's dashboard and visualization gallery. |

The output of a logistic neuron is one over one plus e to the minus total input. If you think about that, if the total input is big and positive, e to the minus a big positive number is close to zero, and so the output will be close to one. If the total input is big and negative, e to the minus a big negative number is a large number, and so the output will be close to zero.

So the input-output function is an S-shaped curve that rises smoothly from zero to one. When the total input is zero, e to the minus zero is one, so the output is a half. The nice thing about a sigmoid is that it has smooth derivatives. The derivatives change continuously, so they are nicely behaved, and they make it easy to do learning, as we will see later on.
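The three cases just described (large positive, large negative, and zero total input) can be checked directly. This is a minimal sketch with illustrative names. The split into two branches is a common numerical precaution added here, not something from the original: it avoids calling `math.exp` on a large positive argument, which would overflow.

```python
import math


def logistic(z):
    """Return 1 / (1 + e^(-z)) without overflowing for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the algebraically equal form e^z / (1 + e^z).
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_neuron(inputs, weights, bias):
    """Logistic of the total input Z = bias + sum_i (x_i * w_i)."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return logistic(z)


print(logistic(0.0))    # prints 0.5
print(logistic(20.0))   # very close to 1
print(logistic(-20.0))  # very close to 0
```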

Finally, there are the stochastic binary neurons. They use just the same equations as logistic units. They compute their total input the same way, and they use the logistic function to compute a real value, which is the probability that they will output a spike. But then, instead of outputting that probability as a real number, they actually make a probabilistic decision, and so what they actually output is either a one or a zero.
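One way to sketch a stochastic binary neuron is to compute the logistic probability and then compare it with a uniform random number. The names below are illustrative, and passing in a seeded `random.Random` object is a choice made here only so that the example is repeatable.

```python
import math
import random


def logistic(z):
    """Numerically safe 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def stochastic_binary_neuron(inputs, weights, bias, rng):
    """Output 1 with probability P = logistic(Z), otherwise 0."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    p = logistic(z)
    # rng.random() is uniform on [0, 1), so this is true with probability p.
    return 1 if rng.random() < p else 0


rng = random.Random(0)  # fixed seed, for repeatability only
samples = [stochastic_binary_neuron([1.0], [0.0], 0.0, rng) for _ in range(10000)]
print(sum(samples) / len(samples))  # Z = 0, so roughly half the outputs are 1
```

The same inputs can give different outputs on different calls. Only the long-run frequency of ones is fixed by the total input.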

They are intrinsically random. They treat P as the probability of producing a one, not as a real number. Of course, if the input is very big and positive they will almost always produce a one, and if the input is big and negative they will almost always produce a zero. We can do a similar trick with rectified linear units.

We can say that the output, the real value that comes out of the rectified linear unit when its input is above zero, is the rate of producing spikes. So that is deterministic, but once we have figured out this rate of producing spikes, the actual times at which spikes are produced are determined by a random process. So the rectified linear unit determines the rate, but intrinsic randomness in the unit determines when the spikes are actually produced.
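The text above does not say which random process produces the spike times. As a sketch only, the example below assumes a Poisson process, in which the gaps between spikes are exponentially distributed with the given rate. That choice, the function names, and the time units are all assumptions made for illustration.

```python
import random


def rectified_rate(inputs, weights, bias):
    """Deterministic part: the rectified linear output, read as a spike rate."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return max(0.0, z)


def spike_times(rate, duration, rng):
    """Random part: spike times in [0, duration), assuming a Poisson process."""
    times = []
    if rate <= 0:
        return times  # a rate of zero never produces a spike
    t = rng.expovariate(rate)  # waiting time until the first spike
    while t < duration:
        times.append(t)
        t += rng.expovariate(rate)  # waiting time until the next spike
    return times


rng = random.Random(0)  # fixed seed, for repeatability only
rate = rectified_rate([1.0, 2.0], [5.0, 7.5], bias=0.0)  # 20 spikes per unit time
spikes = spike_times(rate, duration=100.0, rng=rng)
print(len(spikes))  # on average about rate * duration = 2000
```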
